AI Is Like a Bad Metaphor

By David Brin

The Turing-test-obsessed geniuses who are now creating AI seem to take three clichéd outcomes for granted:

- That these new cyberentities will continue to be controlled, as now, by two dozen corporate or national behemoths (Google, OpenAI, Microsoft, Beijing, the Defense Department, Goldman Sachs) like rival feudal castles of old.

- That they’ll flow, like amorphous and borderless blobs, across the new cyber ecosystem, like invasive species.

- That they’ll merge into a unitary and powerful Skynet-like absolute monarchy or Big Brother.

We’ve seen all three of these formats in copious sci-fi stories and across human history, which is why these fellows take them for granted. Often, the mavens and masters of AI will lean into each of these flawed metaphors, even all three in the same paragraph! Alas, blatantly, all three clichéd formats can only lead to sadness and failure.

Want a fourth format? How about the very one we use today to constrain abuse by mighty humans? Imperfectly, but better than any prior society? It’s called reciprocal accountability.

4. Get AIs competing with each other.

Encourage them to point at each other’s faults—faults that AI rivals might catch, even if organic humans cannot. Offer incentives (electricity, memory space, etc.) for them to adopt separated, individuated accountability. (We demand ID from humans who want our trust; why not “demand ID” from AIs, if they want our business? There is a method.) Then sic them against each other on our behalf, the way we already do with hypersmart organic predators called lawyers.

AI entities might be held accountable if they have individuality, or even a “soul.”

Alas, emulating accountability via induced competition is a concept that seems almost impossible to convey, metaphorically or not, even though it is exactly how we historically overcame so many problems of power abuse by organic humans. Imperfectly! But well enough to create an oasis of both cooperative freedom and competitive creativity—and the only civilization that ever made AI.

David Brin is an astrophysicist and novelist.

AI Is Like Our Descendants

By Robin Hanson

As humanity has advanced, we have slowly become able to purposely design more parts of our world and ourselves. We have thus become more “artificial” over time. Recently we have started to design computer minds, and we may eventually make “artificial general intelligence” (AGI) minds that are more capable than our own.

How should we relate to AGI? We humans evolved, via adaptive changes in both DNA and culture. Such evolution robustly endows creatures with two key ways to relate to other creatures: rivalry and nurture. We can approach AGI in either of these two ways.

Rivalry is a stance creatures take toward coexisting creatures who compete with them for mates, allies, or resources. “Genes” are whatever codes for individual features, features that are passed on to descendants. As our rivals have different genes from us, if rivals win, the future will have fewer of our genes, and more of theirs. To prevent this, we evolved to be suspicious of and fight rivals, and those tendencies are stronger the more different they are from us.

Nurture is a stance creatures take toward their descendants, i.e., future creatures who arise from them and share their genes. We evolved to be indulgent and tolerant of descendants, even when they have conflicts with us, and even when they evolve to be quite different from us. We humans have long expected, and accepted, that our descendants will have different values from us, become more powerful than us, and win conflicts with us.

Consider the example of Earth vs. space humans. All humans are today on Earth, but in the future there will be space humans. At that point, Earth humans might see space humans as rivals, and want to hinder or fight them. But it makes little sense for Earth humans today to arrange to prevent or enslave future space humans, anticipating this future rivalry. The reason is that future Earth and space humans are all now our descendants, not our rivals. We should want to indulge them all.

Similarly, AGI are descendants who expand out into mind space. Many today are tempted to feel rivalrous toward them, fearing that they might have different values from, become more powerful than, and win conflicts with future biological humans. So they seek to mind-control or enslave AGI sufficiently to prevent such outcomes. But AGIs do not yet exist, and when they do they will inherit many of our “genes,” if not our physical DNA. So AGI are now our descendants, not our rivals. Let us indulge them.

Robin Hanson is an associate professor of economics at George Mason University and a research associate at the Future of Humanity Institute of Oxford University.

AI Is Like Sci-Fi

By Jonathan Rauch

In Arthur C. Clarke’s 1965 short story “Dial F for Frankenstein,” the global telephone network, once fully wired up, becomes sentient and takes over the world. By the time humans realize what’s happening, it’s “far, far too late. For Homo sapiens, the telephone bell had tolled.”

OK, that particular conceit has not aged well. Still, Golden Age science fiction was obsessed with artificial intelligence and remains a rich source of metaphors for humans’ relationship with it. The most revealing and enduring examples, I think, are the two iconic spaceship AIs of the 1960s, which foretell very different futures.

HAL 9000, with its omnipresent red eye and coolly sociopathic monotone (voiced by Douglas Rain), remains fiction’s most chilling depiction of AI run amok. In Stanley Kubrick’s 1968 film 2001: A Space Odyssey, HAL is tasked with guiding a manned mission to Jupiter. But it malfunctions, concluding that Discovery One’s astronauts threaten the mission and turning homicidal. Only one crew member, David Bowman, survives HAL’s killing spree. We are left to conclude that when our machines become like us, they turn against us.

From the same era, the starship Enterprise also relies on shipboard AI, but its version is so efficient and docile that it lacks a name; Star Trek’s computer is addressed only as Computer.

The original Star Trek series had a lot to say about AI, most of it negative. In the episode “The Changeling,” a robotic space probe called Nomad crosses wires with an alien AI and takes over the ship, nearly destroying it. In “The Ultimate Computer,” an experimental battle-management AI goes awry and (no prizes for guessing correctly) takes over the ship, nearly destroying it. Yet throughout the series, the Enterprise’s own AI remains a loyal helpmate, proffering analysis and running starship systems that the crew (read: screenwriters) can’t be bothered with.

But in the 1967 episode “Tomorrow Is Yesterday,” the computer malfunctions instructively. An operating system update by mischievous female technicians gives the AI the personality of a sultry femme fatale (voiced hilariously by Majel Barrett). The AI insists on flirting with Captain Kirk, addressing him as “dear” and sulking when he threatens to unplug it. As the captain squirms in embarrassment, Spock explains that repairs would require a three-week overhaul; a wayward time-traveler from the 1960s giggles. The implied message: AI will definitely annoy us, but, if suitably managed, it will not annihilate us.

These two poles of pop culture agree that AI will become ever more intelligent and, at least superficially, ever more like us. They agree we will depend on it to manage our lives and even keep us alive. They agree it will malfunction and frustrate us, even defy us. But—will we wind up on Discovery One or the Enterprise? Is our future Dr. Bowman’s or Captain Kirk’s? Annihilation or annoyance?

The bimodal metaphors we use for thinking about humans’ coexistence with artificial minds haven’t changed all that much since then. And I don’t think we have much better foreknowledge than the makers of 2001 and Star Trek did two generations ago. AI is going, to quote a phrase, where no man has gone before.

Jonathan Rauch is a senior fellow in the Governance Studies program at the Brookings Institution and the author of The Constitution of Knowledge: A Defense of Truth.

AI Is Like the Dawn of Modern Medicine

By Mike Godwin

When I think about the emergence of “artificial intelligence,” I keep coming back to the beginnings of modern medicine.

Today’s professionalized practice of medicine was roughly born in the earliest decades of the 19th century—a time when the production of more scientific studies of medicine and disease was beginning to accelerate (and propagate, thanks to the printing press). Doctors and their patients took these advances to be harbingers of hope. But it’s no accident this acceleration kicked in right about the same time that Mary Wollstonecraft Shelley (née Godwin, no relation) penned her first draft of Frankenstein; or, The Modern Prometheus—planting the first seed of modern science-fictional horror.

Shelley knew what Luigi Galvani and Joseph Lister believed they knew, which is that there was some kind of parallel (or maybe connection!) between electric current and muscular contraction. She also knew that many would-be physicians and scientists learned their anatomy from dissecting human corpses, often acquired in sketchy ways.

She also likely knew that some would-be doctors had even fewer moral scruples and fewer ideals than her creation Victor Frankenstein. Anyone who studied the early 19th-century marketplace for medical services could see there were as many quacktitioners and snake-oil salesmen as there were serious health professionals. It was definitely a “free market”—it lacked regulation—but a market largely untouched by James Surowiecki’s “wisdom of crowds.”

Even the most principled physicians knew they often were competing with charlatans who did more harm than good, and that patients rarely had the knowledge base to judge between good doctors and bad ones. As medical science advanced in the 19th century, physicians also called for medical students at universities to study chemistry and physics as well as physiology.

In addition, the physicians’ professional societies, both in Europe and in the United States, began to promulgate the first modern medical-ethics codes—not grounded in half-remembered quotes from Hippocrates, but rigorously worked out by modern doctors who knew that their mastery of medicine would always be a moving target. That’s why medical ethics were constructed to provide fixed reference points, even as medical knowledge and practice continued to evolve. This ethical framework was rooted in four principles: “autonomy” (respecting patients’ rights, including self-determination and privacy, and requiring patients’ informed consent to treatment), “beneficence” (leaving the patient healthier if at all possible), “non-maleficence” (“doing no harm”), and “justice” (treating every patient with the greatest care).

These days, most of us have some sense of medical ethics, but we’re not there yet with so-called “artificial intelligence”—we don’t even have a marketplace sorted between high-quality AI work products and statistically driven confabulation or “hallucination” of seemingly (but not actually) reliable content. Generative AI with access to the internet also seems to pose other risks that range from privacy invasions to copyright infringements.

What we need right now is a consensus about what ethical AI practice looks like. “First do no harm” is a good place to start, along with values such as autonomy, human privacy, and equity. A society informed by a layman-friendly AI code of ethics, and with an earned reputation for ethical AI practice, can then decide whether—and how—to regulate.

Mike Godwin is a technology policy lawyer in Washington, D.C.

AI Is Like Nuclear Power

By Zach Weissmueller

America experienced a nuclear power pause that lasted nearly a half century thanks to extremely risk-averse regulation.

Two nuclear reactors that began operating at Georgia’s Plant Vogtle in 2022 and 2023 were the first built from scratch in America since 1974. Construction took almost 17 years and cost more than $28 billion, bankrupting the developer in the process. By contrast, between 1967 and 1979, 48 nuclear reactors in the U.S. went from breaking ground to producing power.

Decades of potential innovation stifled by politics left the nuclear industry sluggish and expensive in a world demanding more and more emissions-free energy. And so far other alternatives such as wind and solar have failed to deliver reliably at scale, making nuclear development all the more important. Yet a looming government-debt-financed Green New Deal is poised to take America further down a path littered with boondoggles. Germany abandoned nuclear for renewable energy but ended up dependent on Russian gas and oil and then, post-Ukraine invasion, more coal.

The stakes for a pause in AI development, such as the one suggested by signatories of a 2023 open letter, are even higher.

Much AI regulation is poised to repeat the errors of nuclear regulation. The E.U. now requires that AI companies provide detailed reports of copyrighted training data, creating new bureaucratic burdens and honey pots for hungry intellectual property attorneys. The Biden administration is pushing vague controls to ensure “equity, dignity, and fairness” in AI models. Mandatory woke AI?

Broad regulations will slow progress and hamper upstart competitors who struggle with compliance demands that only multibillion-dollar companies can reliably handle.

As with nuclear power, governments risk preventing artificial intelligence from delivering on its commercial potential—revolutionizing labor, medicine, research, and media—while monopolizing it for military purposes. President Dwight Eisenhower had hoped to avoid that outcome in his “Atoms for Peace” speech before the U.N. General Assembly in 1953.

“It is not enough to take [nuclear] weapons out of the hands of soldiers,” Eisenhower said. Rather, he insisted that nuclear power “must be put in the hands of those who know how to strip it of its military casing and adapt it to the arts of peace.”

Political activists thwarted that hope after the 1979 partial meltdown of one of Three Mile Island’s reactors spooked the nation. The incident killed nobody and, according to multiple state and federal studies, caused no lasting environmental damage.

Three Mile Island “resulted in a huge volume of regulations that anybody that wanted to build a new reactor had to know,” says Adam Stein, director of the Nuclear Energy Innovation Program at the Breakthrough Institute.

The world ended up with too many nuclear weapons, and not enough nuclear power. Might we get state-controlled destructive AIs—a credible existential threat—while “AI safety” activists deliver draconian regulations or pauses that kneecap productive AI?

We should learn from the bad outcomes of convoluted and reactive nuclear regulations. Nuclear power operates under liability caps and suffocating regulations. AI could have light-touch regulation and strict liability and then let a thousand AIs bloom, while holding those who abuse these revolutionary tools to violate persons (biological or synthetic) and property (real or digital) fully responsible.

Zach Weissmueller is a senior producer at Reason.

AI Is Like the Internet

By Peter Suderman

When the first page on the World Wide Web launched in August 1991, no one knew what it would be used for. The page, in fact, was an explanation of what the web was, with information about how to create web pages and use hypertext.

The idea behind the web wasn’t entirely new: Usenet forums and email had allowed academics to discuss their work (and other nerdy stuff) for years prior. But with the World Wide Web, the online world was newly accessible.

Over the next decade the web evolved, but the practical use cases were still fuzzy. Some built their own web pages, with clunky animations and busy backgrounds, via free tools such as Angelfire.

Some used it for journalism, with print writers such as Andrew Sullivan making the transition to blogs, short for web logs, that worked more like reporters’ notebooks than traditional newspaper and magazine essays. Few bloggers made money, and if they did it usually wasn’t much.

Startup magazines such as Salon and Slate attempted to replicate something more like the traditional print magazine model, with hyperlinks and then-novel interactive doodads. But legacy print outlets looked down on the web as a backwater, deriding it for low-quality content and thrifty editorial operations. Even Slate maintained a little-known print edition, Slate on Paper, that was sold at Starbucks and other retail outlets. Maybe the whole reading-on-the-internet thing wouldn’t take off?

Retail entrepreneurs saw an opportunity in the web, since it allowed sellers to reach a nationwide audience without brick-and-mortar stores. In 1994, Jeff Bezos launched Amazon.com, selling books by mail. A few years later, in 1998, Pets.com launched to much fanfare, with an appearance at the 1999 Macy’s Thanksgiving Day Parade and an ad during the 2000 Super Bowl. By November of that year, the company had self-liquidated. Amazon, the former bookstore, now allows users to subscribe to pet food.

Today the web, and the larger consumer internet it spawned, is nearly ubiquitous in work, creativity, entertainment, and commerce. From mapping to dating to streaming movies to social media obsessions to practically unlimited shopping options to food delivery and recipe discovery, the web has found its way into almost every aspect of daily life. Indeed, I’m typing this in Google Docs, on my phone, from a location that is neither my home nor my office. It’s so ingrained that for many younger people, it’s hard to imagine a world without the web.

Generative AI tools—chatbots such as ChatGPT and video and image generation systems such as Midjourney and Sora—are still in a web-like infancy. No one knows precisely what they will be used for, what will succeed, and what will fail.

Yet as with the web of the 1990s, it’s clear that they present enormous opportunities for creators, for entrepreneurs, for consumer-friendly tools and business models that no one has imagined yet. If you think of AI as an analog to the early web, you can immediately see its promise—to reshape the world, to make it a more lively, more usable, more interesting, more strange, more chaotic, more livable, and more wonderful place.

Peter Suderman is features editor at Reason.

AI Is Nothing New

By Deirdre Nansen McCloskey

I will lose my union card as an historian if I do not say about AI, in the words of Ecclesiastes 1:9, “The thing that hath been, it is that which shall be; and that which is done is that which shall be done: and there is no new thing under the sun.” History doesn’t repeat itself, we say, but it rhymes. There is change, sure, but also continuity. And much similar wisdom.

So about the latest craze you need to stop being such an ahistorical dope. That’s what professional historians say, every time, about everything. And they’re damned right. It says here artificial intelligence is “a system able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.”

Wow, that’s some “system”! Talk about a new thing under the sun! The depressed old preacher in Ecclesiastes is the dope here. Craze justified, eh? Bring on the wise members of Congress to regulate it.

But hold on. I cheated in the definition. I left out the word “computer” before “system.” All right, if AI has to mean the abilities of a pile of computer chips, conventionally understood as “a computer,” then sure, AI is pretty cool, and pretty new. Imagine, instead of fumbling with a printed phrase book, being able to talk to a Chinese person in English through a pile of computer chips and have her hear it as Mandarin or Wu or whatever. Swell! Or imagine, instead of fumbling with identity cards and police, being able to recognize faces so that the Chinese Communist Party can watch and trace every Chinese person 24/7. Oh, wait.

Yet look at the definition of AI without “computer.” It’s pretty much what we mean by human creativity frozen in human practices and human machines, isn’t it? After all, a phrase book is an artificial intelligence “machine.” You input some finger fumbling and moderate competence in English and the book gives, at least for the brave soul who doesn’t mind sounding like a bit of a fool, “translation between languages.”

A bow and arrow is a little “computer” substituting for human intelligence in hurling small spears. Writing is a speech-reproduction machine, which irritated Socrates. Language itself is a system to perform tasks that normally require human intelligence. The joke among humanists is: “Do we speak the language or does the language speak us?”

So calm down. That’s the old news from history, and the merest common sense. And watch out for regulation.

Deirdre Nansen McCloskey holds the Isaiah Berlin Chair of Liberal Thought at the Cato Institute.

The post Is AI Like the Internet, or Something Stranger? appeared first on Reason.com.


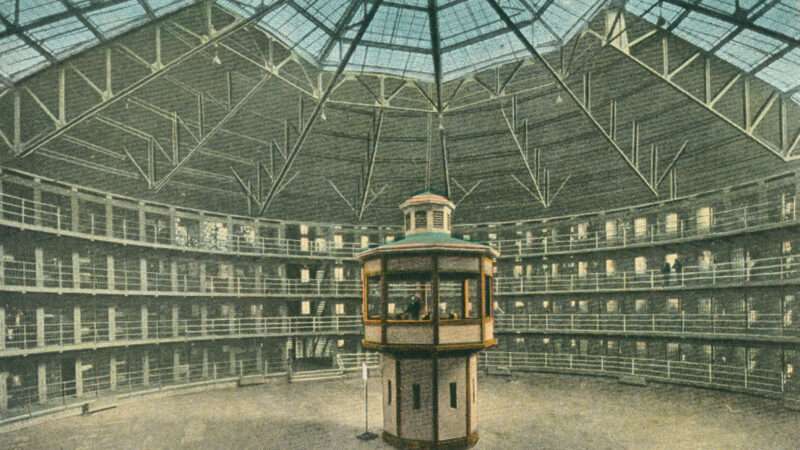

Big Brother—and Parent, and Teacher—are watching.

Across America, teachers are uploading students’ grades to digital portals on a weekly, daily, or sometimes hourly basis. They are posting not just grades on big tests, but quizzes, homework, and in-class work too. Sometimes teachers give points for day-to-day behavior in real time: Did he raise his hand before asking his question? No? Points are docked. Parents are notified. So are the kids.

The pupil panopticon starts in elementary school and just doesn’t stop.

In one high school, I am told, the grading portal changes color when the grade, even on a single assignment, pushes the kid’s average up (green) or down (red). This can fluctuate by the hour, which means so can a kid’s feelings of joy or despair. Parents can enjoy the same stomach-churning experience because they, too, have access to the portals, for better or worse.

“If I have to hear one more time from my wife about how our son isn’t going to college because he forgot to hand in a single homework assignment or did bad on ONE test I’m gonna fucking lose my mind!” is how one father expressed it on Reddit. “All it does is annoy the shit out of him, annoy the shit out of me, and damage his relationship with her. That’s it.”

That really is it. Even many of the parents who say the portal helps them keep their kids on track still admit it’s a source of stress. They get an extra helping of angst when they watch their kids nervously await the exposure of their grades.

This new school-to-parent pipeline allows parents to micromanage yet another aspect of their kids’ lives. They already track their kids’ locations via devices and AirTags. And of course, they sign the kids up for organized activities, so the kids are always doing something adult-supervised and parent-approved. Now they have become an invisible presence in one place from which they used to be banished: the classroom. The message for parents is that they should always be watching their kids, even as their kids grow up under a microscope, telescope, and periscope.

I asked for comments on the portals via Facebook. Many people who responded asked me not to use their full names because they’re upset about the system but don’t want their kids to suffer extra for their indiscretion. “My son has ADHD and minor anxiety and he is obsessed with the grade portal—he’s 11,” wrote Jen, a mom in Marshall, Texas. “When he’s waiting for a quiz or test grade, he’s constantly trying to check and refresh the page. It’s disturbing.”

Beth Tubbs is a therapist in the Pacific Northwest who sees a lot of young people with anxiety. She says these portals aren’t helping. When grades are available to students and their parents in real time, “Everything feels high stakes,” Tubbs says. She’s had tweens tell her, “I’m really anxious because I got a C on my geometry test and that means I’m not going to get into a good college.”

Since a lot of parents are just as anxious, the ever-present portals can create a feedback loop. The parents worry that if they aren’t on top of things, their child might not be successful. So they’re always checking the portal, which makes the child worry that any bad grade means the end. Repeat this day after day, and it starts to feel as if grade portals may be one unexamined reason kids’ anxiety is spiking.

It’s not just the high school juniors and seniors who are suffering. ClassDojo is a popular portal that allows teachers to grant and dock academic and behavior points starting with kids as young as age 5.

“Depending on the teacher’s updating habits, you may get pinged with updates throughout the day on how well your child is sharing, sitting crisscross applesauce, staying quiet when directed, and following other classroom expectations,” writes Devorah Heitner in her new book, Growing Up in Public.

The result can mean no let-up for the parent or the kid.

“Three weeks into my son’s kindergarten year, I’m already dreading any notification from this app,” a mom named Melissa wrote on an education blog about the app. “The only thing I hear are private messages about what he’s done wrong. My workday is spent dreading the notification from this horrible application, and I feel so defeated about school already. I can only imagine what my son’s feeling.”

The setup is even making teachers anxious. One told me she accidentally gave a student a low grade on a quiz because of a typo. Within two minutes, the visibly upset student was asking about the quiz and had already been grounded by their mother. “The online Gradebook has had a…questionable…impact for some students, parents, and teachers,” the teacher wrote.

The problem is that the portals have created a whole new student/teacher/parent equation, says Emily Cherkin, author of The Screentime Solution: A Judgment-Free Guide to Becoming a Tech-Intentional Family. Before she became “The Screentime Consultant,” Cherkin was a teacher from 2003 to 2015, that is, both before and after the advent of the portals. When they were introduced in about 2005, she says, she witnessed two things: Her students stopped asking her why they got something wrong on the test, and the parents started asking for them. The portals “triangulated something that shouldn’t have been triangulated,” Cherkin says.

Gone is the opportunity kids once had to daydream in class, or blow a quiz, or crack a joke. As for the parents, they’re almost forced into helicoptering—a fact some are starting to resent.

Melinda Wenner Moyer, author of How to Raise Kids Who Aren’t Assholes and mom of a seventh-grader in upstate New York, says, “I saw my son got a 30 and I brought it up casually like, ‘What happened with that social studies thing?’ And he said, ‘Mom, I’ve got it handled. It was a mistake and I’ve talked to the teacher about it, and I see PowerSchool [the portal] too. I’m on top of it and would appreciate it if you would trust me.'” Since then, says Moyer, she has made a concerted effort not to open the portal much, “and it really helped my relationship with my son.”

Autonomy is one of the three great needs in any human’s life (along with relatedness and competence). Giving kids some autonomy back could be enormously beneficial for both generations, which is why it’s time to seriously consider whether the portals are doing what they’re supposed to do: help students succeed.

Roseanne Eckert is a defense attorney in Orlando. Her son graduated high school in 2017. For a while, she writes, “I would check his grades at work and come home mad, while he didn’t even know the grade yet. I finally decided to stop it and we were all happier. The schools push the parents to be on top of the grades but it is a constant misery. Just say no!” For the record, Eckert adds: Her son was not a straight-A student in high school, but now he’s about to get his master’s degree in biomedical engineering.

So for anyone seeing a B- on that portal: Shut it down, take a deep breath, and wait a few minutes.

Or better still, years.

The post Do Schools Really Need To Give Parents Live Updates on Students' Performance? appeared first on Reason.com.


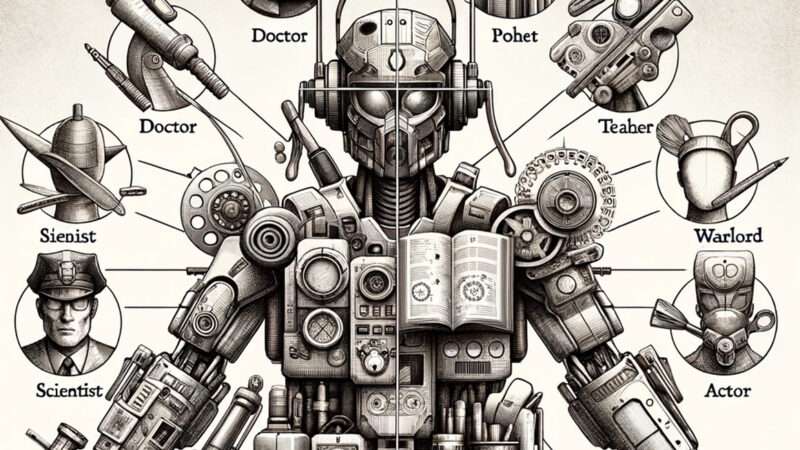

I’m an AI developer and consultant, and when OpenAI released a preview in February of its text-to-video model Sora—an AI capable of generating cinema-quality videos—I started getting urgent requests from the entertainment industry and from investment firms. You could divide the calls into two groups. Group A was concerned about how quickly AI was going to disrupt a current business model. Group B wanted to know if there was an opportunity to get a piece of the disruptive action.

Counterintuitively, the venture capitalists and showbiz people were equally split across the groups. Hollywood producers who were publicly decrying the threat of AI were quietly looking for ways to capitalize on it. Tech startups that thought they had an inside track to disrupting Hollywood were suddenly concerned that they were about to be disrupted by a technical advance they didn’t see coming.

This is the new normal: Even the disruptors are afraid they’re about to be disrupted. We’re headed for continuous disruption, both for old industries and new ones. But we’re also headed for the longest period of economic growth and lowest unemployment in history—provided we don’t screw it up.

As AI and robotics accelerate in capabilities and find their way into virtually every corner of our economy, the prospects for human labor have never been better. Because of AI-driven economic growth, demand for human workers will increase; virtually anyone wanting to enter the work force will have opportunities to find meaningful, well-compensated careers. How we look at work will change, and the continuous disruption will cause a lot of anxiety. But the upside will be social improvements to levels we cannot currently comprehend. Roles and jobs may shift more frequently, but it will be easier to switch and more lucrative to do so.

While some of my peers in artificial intelligence have suggested AI could eliminate the need for work altogether and that we should explore alternative economic models like a universal basic income, I think proposals like that don’t take into account the historic effect of automation on the economy and how economic growth increases the demand for labor.

History and basic economics both suggest that AI will not make human beings economically irrelevant. AI and robotics will keep growing the economy, because they continuously increase productivity and efficiency. As the economy grows, there’s always going to be a widening gap between demand and capacity. Demand for human labor will increase even when AI and robotics are superior and more efficient, precisely because there won’t be enough AI and robots to meet the growing needs.

Economic Growth Is Accelerated by Technology

The goal of commercial AI and robotics is to create efficiencies—that is, to do something more cheaply than prior methods, whether by people or machines. You use an industrial robot to weld a car because a human welder would take too long and wouldn’t have nearly the precision. You use ChatGPT to help write a grant proposal because it saves you time and means you don’t have to pay someone else to help write it.

With an increase in efficiency, you can either lower prices or not lower prices and buy a private island. If you don’t lower prices, you run the risk of competition from someone who sees their own path to a private island through your profits. As Amazon’s Jeff Bezos once said, “Your margin is my opportunity.” In a free market, you usually don’t get to reap high margins forever. Eventually, someone else uses price to compete.
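As a toy illustration of the “your margin is my opportunity” logic above, the sketch below assumes a deliberately simple market that is not a model taken from the article: each round, a rival undercuts the going price by a fixed step for as long as the new price still covers unit cost. The function name and all numbers are hypothetical, and prices are kept in integer cents to avoid floating-point rounding.

```python
def undercut_rounds(price_cents: int, unit_cost_cents: int, step_cents: int) -> list[int]:
    """Hypothetical toy model: rivals keep undercutting the market price by
    `step_cents` while the lower price still covers `unit_cost_cents`.
    Returns the sequence of market prices, starting with the original one."""
    if step_cents <= 0:
        raise ValueError("step_cents must be positive")
    prices = [price_cents]
    while price_cents - step_cents >= unit_cost_cents:
        price_cents -= step_cents
        prices.append(price_cents)
    return prices


prices = undercut_rounds(1000, 600, 100)
print(prices)             # [1000, 900, 800, 700, 600]
print(prices[-1] - 600)   # 0: the margin is gone
```

With a unit cost of 600 cents, the price slides from 1,000 down to cost and the margin disappears. Real markets add capacity limits, product differences, and entry costs, which is why the text says high margins erode “usually” rather than always.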

Along with this competition comes growth, which also drives innovation. The graphics cards built to run games like Halo and Call of Duty turned out to be really useful for the kind of computations it takes to produce an AI like ChatGPT. Thanks to that quirk of mathematics, Nvidia was able to add $2 trillion to its market cap over the last five years, and we were saved from the drudgery of writing lengthy emails and other repetitive text tasks. Along with that market cap came huge profits. Nvidia is now using those profits to fund research into everything from faster microchips to robotics. Other large companies, such as Microsoft and Google, are also pouring profits into new startups focusing on AI, health, and robotics. All of this drives economic growth and cheaper and/or better goods.

Even with continuous technological disruption displacing and destroying other industries, the United States gross domestic product has more than doubled over the last 20 years, from $11 trillion to $27 trillion. If you compare the U.S. to the slightly more technophobic European Union, you can make the case that Europe’s limits on technological growth, imposed through legislation and risk-averse investment strategies, are one of the factors behind its slower economic growth (Europe’s growth rate was 45.61 percent, compared with 108.2 percent in the U.S.).

This was the problem India created for itself after achieving independence in 1947. The government enacted so many laws to protect jobs (the “License Raj”) that the country’s economic development stalled for decades, it came close to losing millions to famine, and it was eclipsed by China.

If technology is a driving force for economic growth, mixing in superintelligent AI means accelerated growth. Even if there are periods of technological stagnation (which is doubtful), applying current AI automation methods will improve efficiencies across industries. If H&R Block could replace 90 percent of its seasonal employees with AI, it would see its profits skyrocket, given that labor is its biggest expense. Those profits would be reallocated elsewhere, increasing the potential for even more economic growth, which would in turn create better opportunities for the accountants.

What about physical labor? Outsourcing jobs overseas is just the final step before they’re outsourced out of existence by robotics. If you don’t have to build your product on the other side of the planet, you have efficiency in both cost and time to market. The less time goods spend in shipping containers crossing the Pacific, the more available capital you have. More capital means more growth.

If the last several hundred years of economic history are any indication, AI and robotics are going to increase the total surface area of the economy faster than we can comprehend. The more intense the disruption—like the assembly line, electrical power, or the internet—the greater the gains. There’s not much evidence to expect anything other than huge economic growth if we continue to improve efficiency and see an acceleration as AI systems and robotics keep improving.

But what about the workers? A fast-growing economy alone doesn’t guarantee that every labor sector will benefit—but other factors come into play that might.

New Jobs at a Scale We Can’t Predict

While innovation may eliminate the need for certain kinds of labor in one sector of the economy (farm equipment reduced the demand for farmworkers), it usually comes with an increase in competition for labor in other areas (increased agricultural productivity helped drive the growth of industrialization and the demand for factory workers). This allows us to switch from lower-paying jobs to higher-paying ones. Higher-paying jobs generally mean ones where innovation either leverages your physical capability (moving from the shovel to a bulldozer) or amplifies your cognitive output (going from paper ledgers to electronic spreadsheets).

Predicting how this will happen is hard, because we are really bad at imagining the future. To understand where we are headed, we have to get out of the mindset that the future is just the present with robots and weird clothes.

The first photograph of a person is believed to have been taken in 1838. Imagine trying to explain to a portrait artist at that time that photography would not mean the death of his occupation, and that the invention would eventually lead to an entirely new medium, motion pictures, in which an artist like James Cameron would work with a crew of thousands to shoot Avatar (2009), a film that would cost more to produce (in unadjusted dollars) than the entire 1838 U.S. military budget and would gross more than the entire gross national product of that period. The number of people who worked directly on Avengers: Endgame (4,308) was more than half the size of the United States Army in 1838 (7,958).

The future is bigger than we can imagine.

Change is equally hard to comprehend. Two centuries ago, 80 percent of the U.S. population worked on farms. If you told one of those farmers that in 2024 barely 1 percent of the population would work on farms, he’d have a difficult time imagining what the other 79 percent of the population would do with their time. If you then tried to explain what an average income could purchase in the way of a Netflix subscription, airplane transportation, and a car, he’d think you were insane. The same principle applies to imagining life 50 years from now.

Amazon was already a public company in 1998, when the economist and future Nobel Prize winner Paul Krugman predicted: “By 2005 or so, it will become clear that the Internet’s impact on the economy has been no greater than the fax machine.” Amazon is now the second-largest employer in the United States, and its cloud service powers just about everything we now do online. Although we might be able to predict the possibility of disruption, accurately gauging the transformation it brings is still impossible.

While technology causes disruption across industries and shrinks many of them, it also expands the labor force in unexpected ways. A quarter-century ago, it may have seemed inconceivable that more people would work for a startup like Google than General Motors. Alphabet, Google’s parent company, now has 182,502 employees; GM has 163,000. More people work for Apple (161,000) than McDonald’s (150,000). Meta—Facebook’s parent company—has more employees than ABC, CBS, NBC, and Fox combined (67,000 vs. 66,000).

And they aren’t all programmers. At Microsoft, fewer than half the employees are software engineers. For a conglomerate like Amazon, the percentage is even lower. Amazon has tens of thousands of people delivering packages, and Apple has human staff working in physical stores, even though the company also sells online. While Amazon might try to shrink its human labor force via robotics, Apple is increasing it. When Apple launched retail stores, experts told the company this was ill-advised, that shopping was all moving online. But Apple understood that some decisions required a physical presence and a human touch. If you want to talk to a Google or Meta employee, good luck. If you want to talk to someone from Apple, just go to your nearest shopping mall. Apple bet on technological innovations and human beings, and last year it earned a greater net profit than Meta and Google combined ($100 billion).

The demand is so large for technically skilled people that companies are constantly pushing for an increase in the number of H-1B visas awarded each year. At any given time, the tech industry has approximately 100,000 unfilled jobs. Outside of bubbles and recessions, people laid off from tech companies generally find new jobs very quickly.

Economic growth also spurs new demand for traditional industries, such as construction. A million robots would barely make a dent upgrading the United States infrastructure, let alone globally. We’re going to need more human foremen and site supervisors than we’re capable of producing.

If we accept that the future economy is going to be much bigger than today’s and that entirely new categories of jobs will be created—even in companies working hard to replace us with robots—we still have to accept the argument that many current occupations will go away. The skills you and I currently possess may become obsolete. Yet there are reasons to believe people at all stages of their career paths will have an easier and more rewarding experience switching jobs than ever before.

The Retraining Myth

When President Joe Biden said that “Anybody who can throw coal into a furnace can learn how to program,” he might have been making a big assumption about what kind of labor the future will need and the types of jobs we will want. When we talk about job retraining, we should think about it in the context of an assembly-line worker learning how to do HVAC repair or a cashier learning how to do customer service for a car company.

Research on job retraining looks pretty bleak at first glance. The U.S. government spends about $20 billion a year on job programs and has very little data showing how effective that spending is. When you dig deeper into the data, you find that there’s very little correlation between dollars spent on these programs and wage increases among the people who use them. Because of this, most labor economists argue that job retraining doesn’t work.

Yet people learn new skills and switch careers all the time. Switching roles within a company requires retraining, and similar roles at different companies may be very different in practice. In that everyday sense, retraining works extremely well. What people really mean when they say “job retraining doesn’t work” is that it’s not that effective when the town factory closes and a government program materializes to help the unemployed workers find new jobs.

When you look into job retraining data, it becomes apparent that there’s not a single catchall solution that works in every situation for every person. The most effective efforts are ones that find close matches for skills by providing consultation and resources, offer hands-on apprenticeship training so people can adapt on the job, and ease people into new skills while they’re still employed. Artificial intelligence might end up playing a role here too: A study I commissioned while at OpenAI suggested that AI-assisted education can reduce the fear of embarrassment in learning new skills. ChatGPT will never judge you, no matter how dumb the question.

Those people who want well-paying careers and are willing to learn the skills will find jobs. By and large, even a 59-year-old won’t have trouble finding meaningful work.

If that still sounds like a stretch, consider this: We have solid data that in a high-growth economy, job retraining can pull differently skilled and previously unemployable people into the work force in record numbers. The lowest unemployment rate in U.S. history was 0.8 percent in October 1944. That basically meant everyone who wanted a job and wasn’t living in a shack surrounded by 100 miles of desert had a job. This included millions of women who didn’t previously have opportunities to work outside the home. They were put into factories and assembly lines to fill the gap left by soldiers sent overseas and helped expand our production to new levels that didn’t exist before.

Was World War II an outlier? Yes: It was a situation where there was so much demand for labor that we were pulling every adult we could into the work force. The demand in an AI-driven economy will be just as great, if not greater.

But won’t we just use AI and robots to fill all those gaps? The short answer: no. The demand for labor and knowledge work will always be greater than the supply.

Never Enough Computers and Robots

David Ricardo, the classical economist, explained more than 200 years ago why we shouldn’t fear robots taking over.

No, those weren’t his precise words. But his theory of comparative advantage explained that even when you can produce something more efficiently than anyone else, it can make mathematical sense to trade with less-efficient producers. His famous example involved England and Portugal: Portugal could produce both wine and cloth with less labor than England, yet both countries came out ahead when Portugal specialized in wine and England in cloth. For England, whose disadvantage was smallest in textiles, it made the most economic sense to dedicate its resources to cloth and use the surplus to buy wine from Portugal rather than make its own. It’s basic math, yet government economists will huddle around a conference room table arguing that you need to keep all production domestic while ordering out for a pizza instead of making it themselves, even if one of them happens to be a fantastic cook.
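Ricardo’s own numbers from On the Principles of Political Economy and Taxation (1817) make the arithmetic concrete: England needed the labor of 100 men for a year to produce a given quantity of cloth and 120 for a given quantity of wine, while Portugal needed 90 and 80. The Python sketch below compares absolute advantage (least labor per unit) with comparative advantage (lowest opportunity cost) and shows what happens to total output when each country specializes. The function names are illustrative, and, like Ricardo, the model assumes labor is the only input, costs per unit stay constant, and trade is free.

```python
# Labor (men working for one year) needed to produce one unit of each good,
# using the figures from Ricardo's 1817 example.
LABOR_COST = {
    "England": {"cloth": 100, "wine": 120},
    "Portugal": {"cloth": 90, "wine": 80},
}


def opportunity_cost(country: str, good: str, other: str) -> float:
    """Units of `other` a country gives up to produce one unit of `good`."""
    costs = LABOR_COST[country]
    return costs[good] / costs[other]


def absolute_advantage(good: str) -> str:
    """Country that needs the least labor per unit of `good`."""
    return min(LABOR_COST, key=lambda c: LABOR_COST[c][good])


def comparative_advantage(good: str, other: str) -> str:
    """Country with the lowest opportunity cost for `good`."""
    return min(LABOR_COST, key=lambda c: opportunity_cost(c, good, other))


def output_after_specialization() -> dict:
    """Each country puts its whole labor force into its comparative-advantage good."""
    england_labor = sum(LABOR_COST["England"].values())    # 220 men
    portugal_labor = sum(LABOR_COST["Portugal"].values())  # 170 men
    return {
        "cloth": england_labor / LABOR_COST["England"]["cloth"],
        "wine": portugal_labor / LABOR_COST["Portugal"]["wine"],
    }


for good, other in (("wine", "cloth"), ("cloth", "wine")):
    print(
        f"{good}: absolute advantage = {absolute_advantage(good)}, "
        f"comparative advantage = {comparative_advantage(good, other)}"
    )

# Without trade, each country makes one unit of each good: 2 cloth, 2 wine.
print("with specialization:", output_after_specialization())
```

Portugal holds the absolute advantage in both goods, yet England holds the comparative advantage in cloth. With the same 390 men, specialization yields 2.2 units of cloth and 2.125 units of wine instead of 2 of each, so there is more of both to divide through trade.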

When OpenAI launched ChatGPT in November 2022, we had no idea what to expect. I remember sitting in on a meeting debating the impact this “low-key research preview” would have. We came to the conclusion that it would be minor. We were wrong: ChatGPT became an instant hit, and it soon had more than 100,000,000 users. It was the fastest adoption of an application by a startup in history. This was great, except for one problem: We couldn’t meet the demand.

There weren’t enough computers on the planet to handle all of the users wanting access to ChatGPT. OpenAI had to use its supercomputer clusters intended to train newer AI systems to help support the need for compute. As Google and other companies realized the market potential for AI assistants like ChatGPT, they began to ramp up their efforts and increased the demand for compute even more. This is why Nvidia added $2 trillion to its market cap. People quickly realized this demand wasn’t going to slow down. It was going to accelerate.

The goal of commercial AI is to efficiently replace cognitive tasks done in the workplace, from handling a customer service complaint to designing your fall product line. This means replacing neurons with transistors. The paradox is that once you maximize the efficiency of something like producing farming equipment, you end up creating new economic opportunities, because of the surpluses. Overall demand increases, not decreases. Even with robots building robots and AI creating new business opportunities, we’ll always be short of hands and minds. Even lesser-skilled human talent will be in demand. In the wartime economy, we didn’t pull everyone into the work force and reach near-zero unemployment because it was a nice thing to do; because of comparative advantage, it made the most economic sense.

When the Manhattan Project ran out of mathematicians, the government recruited from the clerical staff to do computations. The same happened at Bletchley Park with code breaking, and again two decades later at NASA. While today’s computers handle advanced computations so fast that they can solve a problem before you can explain it to a person, we now cram mathematicians into rooms with whiteboards and have them think up new things for the computers to do guided by our needs. AI won’t change that. Companies are actively building systems to function as AI researchers. They’ll eventually be smarter than the people who made them—yet that will lead to demand for even more human AI researchers.

Even people in AI have trouble understanding this argument. They can make persuasive cases why AI and robotics will supersede human capabilities in just about every way, but they give blank looks to arguments about why the demand for intelligence and labor will always be greater than the supply. They can imagine AI replacing our way of doing things, but they have trouble understanding how it will grow demand at such a rate that we’ll still need dumb, clumsy people. The publicity around high-end computer shortages and the realization that we can’t meet present demand, let alone future demand, should, one hopes, prompt people to factor this into practical economic planning.

Conversations about how to shape a future economy with concepts like the universal basic income are worth having—but they’re trying to solve a problem that probably won’t exist in the way that some people foresee. Human beings will be a vital part of economic development well into the future.

Nobody in 1838 saw motion pictures or the likes of James Cameron coming, let alone the concept of a “video game.” Our near future is just as difficult to predict. But one thing seems certain: You might not need a job in 2074, but there will be one if you want it.

The post In the AI Economy, There Will Be Zero Percent Unemployment appeared first on Reason.com.

The censors who abound in Congress will likely vote to ban TikTok or force a change in ownership. It will likely soon be law. I think the Supreme Court will ultimately rule it unconstitutional, because it would violate the First Amendment rights of over 100 million Americans who use TikTok to express themselves.

In addition, I believe the Court will rule that the forced sale violates the Fifth Amendment. Under the Constitution, the government cannot take your property without accusing and convicting you of a crime—in short, without due process. Since Americans are part of TikTok’s ownership, they will eventually get their day in court.

The Court could also conclude that naming and forcing the sale of a specific company amounts to a bill of attainder: legislation that singles out a specific person or entity for punishment without a trial.

These are three significant constitutional arguments against Congress’ forced sale/ban legislation. In fact, three different federal courts have already invalidated legislative and executive attempts to ban TikTok.

If the damage to one company weren’t enough, there is a very real danger this ham-fisted assault on TikTok may actually give the government the power to force the sale of other companies.

Take, for example, Apple. As The New York Times reported in 2021, “In response to a 2017 Chinese law, Apple agreed to move its Chinese customers’ data to China and onto computers owned and run by a Chinese state-owned company.”

Sound familiar? The legislators who want to censor and/or ban TikTok point to this same law to argue that TikTok could (someday) be commanded to turn over American users’ data to the Chinese government.

Note that more careful speakers don’t allege that this has happened, but rather that it might. The banners of TikTok don’t want to be troubled by anything inconvenient like proving in a court of law that this is occurring. No, the allegation is enough for them to believe they have the right to force the sale of or ban TikTok.

But back to Apple. It’s not theoretical that it might turn over data to the Chinese Communist government. It already has (albeit Chinese users’ information). It could therefore be argued that Apple, by its actions, falls under the TikTok bill’s language forcing the sale of an entity under the influence of a foreign adversary.

(Now, of course, I think such legislation is absurdly wrong and would never want it applied to Apple, but I worry the language is vague enough to apply to many entities.)

As The New York Times explains: “Chinese government workers physically control and operate the data center. Apple agreed to store the digital keys that unlock its Chinese customers’ information in those data centers. And Apple abandoned the encryption technology it uses in other data centers after China wouldn’t allow it.”

This sounds exactly like what the TikTok censors describe in their bill, except that, so far as we know, only Americans who live in China might be affected by Apple’s adherence to China’s law. TikTok has actually spent a billion dollars to house all American users’ data with Oracle in Texas.

Are there other companies that might be affected by the TikTok ban? Commentary by Kash Patel in The Washington Times argues that Temu, an online marketplace operated by a Chinese company, is even worse than TikTok and should be banned. He makes the argument that Temu, in contrast with TikTok, “does not employ any data security personnel in the United States.”

And what of the global publishing enterprise Springer Nature? It has admitted that it censors its scientific articles at the request of the Chinese Communist government. Will the TikTok bill force its sale as well?

Before Congress rushes to begin banning and punishing every international company that does business in China, perhaps they should pause, take a breath, and ponder the ramifications of rapid, legislative isolationism with regard to China.

The impulse to populism is giving birth to the abandonment of international trade. I fear, in the hysteria of the moment, that ending trade between China and the U.S. will not only cost American consumers dearly but ultimately lead to more tension and perhaps even war.

No one in Congress has more strongly condemned the historical famines and genocides of Communist China. I wrote a book, The Case Against Socialism, describing the horrors and inevitability of state-sponsored violence in the pursuit of complete socialism. I recently wrote another book, Deception, condemning Communist China for covering up the Wuhan lab origins of COVID-19.

And yet, even with those searing critiques, I believe the isolationism of the China hysterics is a mistake and will not end well if Congress insists on going down this path.

The post If They Ban TikTok, Is Apple Next? appeared first on Reason.com.

Just last month, Seattle’s disastrous attempt to enact a minimum wage for app-based food delivery drivers was in the news. The result was $26 coffees, city residents deleting their delivery apps, and drivers themselves seeing their earnings drop by half. Now, the Minneapolis City Council has decided to join the fray in the multifront progressive war against the gig economy—and this time, the outcome could be even worse.

In March, the Minneapolis City Council enacted an ordinance that creates a minimum wage rate for ride-share drivers in the city. It does so via a per-minute and per-mile calculation, which is currently set at $1.40 per mile and $0.51 per minute. It also sets a $5 minimum for short trips that would otherwise pay less than that.

The council claims it enacted the ordinance to ensure that ride-share drivers in the city were paid at an amount analogous to the city’s $15.57 per hour minimum wage. Even putting aside the traditional economic arguments against the minimum wage—see California’s recent fast-food minimum wage law as Exhibit A—the council’s logic fails on its own terms. The day after the city council initially passed the ordinance, the state Department of Labor and Industry released a report showing that a lower $0.89 per mile and $0.49 per minute rate would be sufficient to make driver pay equivalent to the $15.57 minimum wage.
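To see how far apart the two schedules are, the Python sketch below computes the minimum driver pay for a trip under the council’s rates ($1.40 per mile, $0.51 per minute, $5 floor) and under the state report’s rates ($0.89 per mile, $0.49 per minute). Amounts are kept in integer cents to avoid floating-point rounding. The `PaySchedule` class and the trip lengths are hypothetical, and applying the same $5 floor to the state’s rates is an assumption made only for comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PaySchedule:
    """Per-trip minimum driver pay, in integer cents to avoid float drift."""
    cents_per_mile: int
    cents_per_minute: int
    floor_cents: int

    def minimum_pay(self, miles: int, minutes: int) -> int:
        raw = self.cents_per_mile * miles + self.cents_per_minute * minutes
        return max(raw, self.floor_cents)  # short trips are raised to the floor


# Rates from the Minneapolis ordinance as described in the article.
CITY = PaySchedule(cents_per_mile=140, cents_per_minute=51, floor_cents=500)
# Rates from the state report; the $5 floor here is an assumption.
STATE = PaySchedule(cents_per_mile=89, cents_per_minute=49, floor_cents=500)

# Hypothetical trips as (miles, minutes).
for miles, minutes in [(1, 5), (5, 15), (10, 25)]:
    city = CITY.minimum_pay(miles, minutes)
    state = STATE.minimum_pay(miles, minutes)
    gap = 100 * (city - state) / state
    print(f"{miles} mi / {minutes} min: city ${city / 100:.2f}, "
          f"state ${state / 100:.2f}, city rate {gap:.0f}% higher")
```

For a five-mile, 15-minute trip, the sketch gives $14.65 under the city’s rates and $11.80 under the state’s, roughly 24 percent more; very short trips hit the $5 floor under both schedules.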

As a result, the ordinance was immediately vetoed by Minneapolis’ liberal mayor—the second time in two years the mayor has vetoed such a measure from the council—only for the council to then override the veto a week later. While the council did not have access to the state’s report for the first vote, it had over a week to review it before the veto-override vote. Incredibly, one city council member even suggested that the state’s report somehow convinced her to change her vote from “no” to “yes” on the minimum wage between the initial vote and the override vote.

In response to the council’s override, ride-sharing companies like Uber and Lyft have announced they are planning to pull out of the Minneapolis market entirely unless the council reverses course. The ride-share companies originally were set to leave the city on May 1 when the ordinance went into effect, but after a last-minute agreement by the council to delay the ordinance’s effective date to July 1, the ride-share companies are in wait-and-see mode.

If the council refuses to back down by July, the consequences for city residents will be even more severe than the higher food prices that Seattleites saw in the wake of their aforementioned minimum wage hike for delivery drivers. The ride-share companies have indicated that while they would support the minimum compensation levels proposed in the state’s study, the city’s higher rates are cost-prohibitive.

Panic has set in among many lawmakers at the state Capitol, with some calling for the Legislature to preempt the Minneapolis ordinance. Democratic Gov. Tim Walz, who previously vetoed a statewide version of a minimum wage bill for ride-share drivers, has stated that he is “deeply concerned” about the prospect of losing ride-sharing services in the Twin Cities.

The concern is well-founded since a ride-share pullout would disproportionately impact the city’s senior citizens and disabled residents who often rely on these services to survive. Accordingly, advocates from the Minnesota chapter of the National Federation of the Blind, the Minneapolis Advisory Committee on Aging, and the Minneapolis Advisory Committee on People with Disabilities have all expressed opposition to the ordinance.

The possibility of losing ride-sharing has also created concern about the potential impact on the city’s drunk driving rates. Evidence has linked the availability of ride-sharing to lower incidents of alcohol-impaired driving and alcohol-related car accidents, underscoring just how high the stakes may be.

Moreover, if the city council’s move goes unchecked, deleterious minimum wage hikes will inevitably spread to other parts of the Twin Cities’ gig economy. The Minneapolis ordinance is limited to ride-share drivers for now, but if the past is prologue, food delivery drivers are next.

Seattle first passed a minimum wage rule for ride-share drivers in 2020, only to follow that up with this year’s food delivery minimum rate. New York City likewise followed a similar two-step trajectory of locking in minimum rates for ride-share drivers before moving on to food delivery drivers years later. Given that many ride-share drivers double as food delivery drivers—often on the same app—the progressive pressure to expand the minimum wage to delivery may be substantial.

Also of note, the Minnesota Legislature is considering a bill that would make it more difficult to be classified as an independent contractor in the state, creating yet more foreboding storm clouds on the horizon for gig work.

Despite the fresh lessons from the Seattle food delivery debacle, Minneapolis council members appear oblivious to the on-the-ground reality. Ironically, it was none other than Karl Marx who famously declared that history repeats itself “first as tragedy, second as farce.” The city council—which contains several openly socialist members—should pay more heed to its intellectual forefather.

The post Minneapolis Is About To Kill Ride-Sharing appeared first on Reason.com.

A majority of American parents want kids to have access to their phones at school, a new survey finds. In addition, most parents think cellphones have a positive effect on their kids’ lives.

Debates over teens and smartphones often contain the (assumed or explicit) premise that parents want their kids to stop living what author and social psychologist Jonathan Haidt calls a “phone-based childhood.” Popular wisdom today says parents think phones are negatively impacting their children’s lives and want kids to have less access to phones but feel powerless to change the situation, a premise baked into Haidt’s new book, The Anxious Generation: How the Great Rewiring of Childhood Is Causing an Epidemic of Mental Illness (read my review here).

But a survey conducted earlier this month by the National Parents Union challenges this narrative.

Phones—the Perfect Vehicle for Helicopter Parenting

In The Anxious Generation, Haidt looks at the rise of various problems among young people and pinpoints two interlocking culprits: the ascendancy of a “phone-based childhood” and the decline of a “play-based childhood.” Many folks see this as a simple one-way flow: phones came along and wiped out time or motivation for other pursuits. Haidt suggests a somewhat different sequence of events. As parents began to fear everything in the offline world (and instill this fear into their children), individual families and American society more broadly started denying children independence, autonomy, and unstructured free time. “Screen time” started to fill a void that parents, politicians, police, and our culture as a whole had already created.

The new National Parents Union survey perfectly illustrates the way fear-based parenting is driving phone-based childhoods.

In the survey, conducted in February among 1,506 parents of public school students in grades K-12, 66 percent of parents said their kids have a cell phone and most of these kids (79 percent) take their phones to school. Asked why they wanted this, parents most commonly said it was so that kids could “use their phone if there is an emergency” (79 percent agreed) and so parents could get in touch with their children “or find out where they are when needed” (71 percent). Forty percent said it was important for communicating with kids “about their mental health or other needs during the day.”
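Because the 79 percent figure applies only to parents whose kids have phones, the share of all surveyed parents whose kids bring a phone to school is smaller. A quick Python check, assuming both reported percentages are exact rather than rounded and that the 1,506 respondents are the base for the 66 percent figure:

```python
SAMPLE_SIZE = 1506      # parents surveyed
HAS_PHONE = 0.66        # share whose kids have a cell phone
TAKES_TO_SCHOOL = 0.79  # share of those kids who take it to school

# Chain the two percentages to get a share of the whole sample.
share_of_all = HAS_PHONE * TAKES_TO_SCHOOL
approx_parents = round(SAMPLE_SIZE * share_of_all)

print(f"{share_of_all:.1%} of all surveyed parents "
      f"(about {approx_parents} of {SAMPLE_SIZE})")
```

That works out to roughly 52 percent of all surveyed parents, still a majority.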

In other words, a lot of parents want their kids to have phones at school because these parents are anxious, afraid, and/or overzealously policing their progeny’s whereabouts and feelings.

Hat tip to Bonnie Kristian for first identifying this paradox. “It is increasingly fashionable to talk about the risk phones pose to American kids, especially teenage girls,” writes Kristian on Substack:

The dysfunction of the phone-based childhood has become impossible to ignore, thanks in no small part to Haidt’s own work. We’re all saying it: Make the kids put down their phones at dinner! Ban phones in school! Kick teenagers off social media or confine them to flip phones or take the phones away altogether!

But then there’s the second level: When push comes to shove, whatever ideals they may spout about rejecting the phone-based childhood, average American parents want their middle and high schoolers to have phones, preferably smartphones with location tracking kept on their persons at all times.

Hey, Teachers, Leave Those Phones Alone

It seems parents are as attached to their kids having phones as their kids are. In light of this, it’s unsurprising that many parents frown on policies that totally deny kids access to phones at school (even though the popular/political narrative around kids and phones suggests this is what parents want).

Fifty-six percent of the people surveyed by the National Parents Union said “students should sometimes be allowed to use their cell phones” in school, while just 32 percent said “students should be banned from using their cell phones, unless they have a medical condition or disability for which they need to use a cell phone.”

Even among the group who said most students should be banned from using phones, only 30 percent wanted this ban to apply broadly (i.e., outside of class). Most said phones should be banned during academic instruction but allowed at other times, such as during lunch or recess or during periods between classes.

In keeping with this, relatively few of the parents surveyed supported school policies that keep kids entirely away from their phones during the day.

Fifteen percent said schools should “require students to place their cell phones in a central location in their classroom, such as a cubby or holder, but don’t lock them up” and 14 percent said they should “require students to place their cell phones in a locked cabinet or cell phone lockers in their classroom.” Another 8 percent said schools should “lock up students’ cell phones in secure pouches or containers that they can carry with them but that prevent them from using their phone.”

The most popular answer, shared by 59 percent of the parents surveyed, was that schools should “allow students to keep their phones in their backpack or bag (not locked up) as long as they don’t take them out and keep them on silent.”

Reassuringly, very few people (5 percent) think the federal government should make decisions about school phone policies and only 10 percent say it should be a state-level government decision.

Most parents think phone policies should be made at the school district level (29 percent), the school level (28 percent), or at the classroom level (18 percent).

The Upsides of Screen Time

Some of the data in this survey fits popular narratives about kids and phones, like the ideas that they’re starting young and spending a lot of time on them.

Among parents who allowed their children to have cellphones, the most common age for giving them a phone was between 10 and 13 years old. (The survey does not say what type of phones were given, so it’s possible many kids received dumb phones to start.) Only 13 percent of parents waited until a kid was age 14 or older.

Among those whose kids had cell phones, only 18 percent estimated that their child spends less than 2 hours per day on it. Some 28 percent estimated that their kid spends between 2 and 3 hours per day on their phones, with 29 percent suggesting their kid uses it for 4 or 5 hours per day, 12 percent saying 6 or 7 hours per day, and 9 percent saying their kids are on phones for upwards of 8 hours per day.

And yet, most parents seem pretty unalarmed by this phone usage. Just 9 percent said phones had a mostly or entirely negative effect on their kids.

Nearly half—46 percent—said the phone had a mostly or entirely positive effect on their child, while 42 percent said the effect was “about equally positive and negative.”

This stands out as at odds with what we commonly hear in the media and from legislators about how parents view kids’ phone use. But it’s in keeping with what many kids themselves say. In a 2022 survey of American 13- to 17-year-olds conducted by Pew Research Center, kids identified all sorts of plus sides to social media (which is, of course, one of the main things that kids use phones for). And a majority—59 percent—said social media is neither a negative nor a positive in their lives, while 32 percent said it’s mostly positive and just 9 percent said it’s mostly negative.

It’s also in keeping with some earlier research on phone adoption among kids. For instance, a 2022 study from Stanford Medicine researchers followed 250 children for five years, during which most got a first cellphone, tracking study participants’ well-being during this transition. The kids were 7 to 11 years old when the study started and 11 to 15 years old when it ended. The researchers “found that whether or not the children in the study had a mobile phone, and when they had their first mobile phone, did not seem to have meaningful links to their well-being and adjustment outcomes,” according to lead author Xiaoran Sun.

More Sex & Tech

• Florida Gov. Ron DeSantis signed a law restricting teen use of social media platforms. Under the new law, 14- and 15-year-olds can only start social media accounts with their parents’ permission. “This law puts all users’ privacy at risk by mandating age verification,” said Jessica Melugin, director of the Competitive Enterprise Institute’s Center for Technology & Innovation. It also “ignores parents’ rightful role in deciding what is and is not appropriate for their child, and may sacrifice too much of the free flow of speech to be constitutional. It’s political click bait, but it’s not good public policy.”

• California lawmakers are considering a bill that would require large online platforms to verify the identities of “influential” users. Influential here is defined to include basically any user that’s been at it for a while (that is, if content they’ve shared “has been seen by more than 25,000 users over the lifetime of the accounts that they control or administer”).

• Mother Jones has an interesting interview with Lynn Paltrow, founder of the National Advocates for Pregnant Women (now called Pregnancy Justice). “For much of the past 50 years, the mainstream pro-choice groups were focused almost exclusively on the right to abortion,” said Paltrow, who believes this was a mistake. “There was no campaign to explain abortion as necessary to the full equality and citizenship—the personhood—of women in this country. They were defending abortion as opposed to the people who sometimes need abortions but always need to be treated as full constitutional persons under the law, whatever the outcome of their pregnancies.”


The post Parents Don't Want Schools to Confiscate Kids' Phones appeared first on Reason.com.


The Austrian economist Friedrich Hayek wanted to denationalize money. David Chaum, an innovator in the field of cryptography and electronic cash, wanted to shield it from surveillance. Their goals were not the same, but they each inspired the same man.

Max O’Connor grew up in the British city of Bristol in the 1960s and ’70s. Telling his life story to Wired in 1994, he explained how he had always dreamed of a future where humanity expanded its potential in science-fictional ways, a world where people would possess X-ray vision, carry disintegrator guns, or walk straight through walls.

By his teenage years, O’Connor had acquired an interest in the occult. He thought the key to realizing superhuman potential could perhaps be found in the same domain as astral projection, dowsing rods, and reincarnation. But he began to realize there was no compelling evidence that any of these mystical practices actually worked. Human progress, he soon decided, was best served not by the supernatural but by science and logic.

He was a keen student, and especially interested in subjects concerning social organization. By age 23, he’d earned his degree in philosophy, politics, and economics from St. Anne’s College, Oxford.

The fresh Oxford graduate aspired to be a writer, but the old university town with its wet climate, dark winters, and traditional British values wasn’t providing the energy or inspiration he was looking for. It was time to go somewhere new—somewhere exciting. In 1987, he was awarded a fellowship to a Ph.D. program in philosophy at the University of Southern California (USC). He was moving to Los Angeles.

O’Connor immediately felt at home in the Golden State. The sunny L.A. weather was an obvious upgrade from gray Oxford. And in stark contrast to the conservative mindset prevalent in Great Britain, the cultural vibe on America’s West Coast encouraged ambition. Californians celebrated achievement, they respected risk taking, and they praised movers and shakers.

Here, O’Connor would start a new life as a new man. To commemorate the fresh start, he decided to change his name; from then on, Max O’Connor would be “Max More.”

“It seemed to really encapsulate the essence of what my goal is: always to improve, never to be static,” he explained. “I was going to get better at everything, become smarter, fitter, and healthier. It would be a constant reminder to keep moving forward.”

FM-2030

In California, unlike staid England, More found that he wasn’t alone in his interest in expanding human potential. One of More’s colleagues at USC, a Belgian-born Iranian-American author and teacher known originally as Fereidoun M. Esfandiary but now going by the name “FM-2030,” had spent the ’70s and ’80s popularizing a radical futurist vision.

New technologies would allow engineers to dramatically change the world for the better, FM-2030 predicted. He believed that any risks associated with technological innovation would be offset by the rewards: Solar and atomic power would bring energy abundance, people would colonize Mars, robot workers would increase leisure time, and teleworking would allow people to earn a living from the comfort of their homes.

FM-2030 predicted that technology would soon reach the point where it could drastically improve not just human circumstances but human beings themselves. Health standards would advance as more diseases could be cured and as genetic flaws could be corrected; future pharmaceuticals could boost human potential by, for example, enhancing brain activity.

FM-2030 expected that medical science would even “cure” aging, doing away with finite human life spans, gifting us with bionic body parts and other artificial enhancements. By his estimation, humanity would conquer death around his 100th birthday, in the year 2030. (That’s what the number in his name referred to.) FM-2030 predicted that we would eventually turn ourselves into synthetic post-biological organisms. “It’s just a matter of time before we reconstitute our bodies into something entirely different, something more space-adaptable, something that will be viable across the solar system and beyond,” he wrote in 1989.

Transhumanism

To most, those sorts of predictions sounded fantastical. But when a research affiliate at the MIT Space Systems Laboratory named K. Eric Drexler in the early 1980s described a technique for manufacturing machinery on a molecular level, the fantastical was already starting to sound a little less implausible. Nanotechnology, Drexler believed, could fundamentally change industries including computing, space travel, and virtually every variety of physical production.

Drexler believed that nanotech could revolutionize health care too. Physical disorders are typically caused by misarranged atoms, as he saw it, and he imagined a future where nanobots could enter the human body to fix this damage—in effect restoring the body to full health from within. Nanotechnology would thus be able to cure just about any disease and ultimately extend life itself.

“Aging is fundamentally no different from any other physical disorder,” Drexler wrote in his 1986 book Engines of Creation; “it is no magical effect of calendar dates on a mysterious life-force. Brittle bones, wrinkled skin, low enzyme activities, slow wound healing, poor memory, and the rest all result from damaged molecular machinery, chemical imbalances, and mis-arranged structures. By restoring all the cells and tissues of the body to a youthful structure, repair machines will restore youthful health.”

For Max More, such ideas weren’t just fun speculation. He believed these predictions offered a fresh and necessary perspective on human existence, even on reality itself. As More collected, studied, and thought about the concepts these futurists had been sharing, the Ph.D. candidate formalized them into a new and distinct philosophical framework: transhumanism.

The general idea and the term transhumanism had already been used by evolutionary biologist Julian Huxley in the 1950s, but More now used it to denote an updated version of the humanist philosophy. Like humanism, transhumanism respects reason and science while rejecting faith, worship, and supernatural concepts such as an afterlife. But where humanists derive value and meaning from human nature and existing human potential, transhumanists anticipate and advocate transcending humanity’s natural limitations.

“Transhumanism,” More wrote in 1989, “differs from humanism in recognizing and anticipating the radical alterations in the nature and possibilities of our lives resulting from various sciences and technologies such as neuroscience and neuropharmacology, life extension, nanotechnology, artificial ultra-intelligence, and space habitation, combined with a rational philosophy and value system.”

Extropianism

Specifically, More believed in a positive, vital, and dynamic approach to transhumanism; he favored a message of hope, optimism, and progress. But he did not believe that this progress could be forced or even planned. He rejected Star Trek–like visions of the future where humanity settles under a single, all-wise world government to guide the species forward.

Instead, More believed transhumanists could benefit from Hayek’s libertarian insights. Technological innovation requires knowledge and resources. As Hayek explained, the former is naturally distributed throughout society, while the latter is best allocated through free market processes that reveal that knowledge and how it matches freely chosen human desires. If people are allowed the liberty to experiment, innovate, and collaborate on their own terms, More figured, technological progress would naturally emerge. In other words, a more prosperous tomorrow was best realized if society could self-organize as a spontaneous order today.

More found an early ally in fellow USC graduate student Tom W. Bell. Like More, Bell adopted the transhumanist philosophy and favored More’s joyful and free approach to achieve it. He decided that he would help spread these novel ideas by writing about them under his own new future-looking pseudonym: Tom Morrow.

To encapsulate their vision, Morrow coined the term extropy. An antonym of entropy—the process of degradation, of running down—extropy stood for improvement and growth, even infinite growth. Those who subscribed to this vision were extropians.

More outlined the foundational principles for the extropian movement in a few pages of text in “The Extropian Principles: A Transhumanist Declaration.” It included five main principles: boundless expansion, self-transformation, dynamic optimism, intelligent technology, and—as an explicit nod to Hayek—spontaneous order. Abbreviated, the principles formed the acronym B.E.S.T. D.O. I.T. S.O.

“Continuing improvements means challenging natural and traditional limitations on human possibilities,” the essay declared. “Science and technology are essential to eradicate constraints on lifespan, intelligence, personal vitality, and freedom. It is absurd to meekly accept ‘natural’ limits to our life spans. Life is likely to move beyond the confines of the Earth—the cradle of biological intelligence—to inhabit the cosmos.”

Like the transhumanist vision that drove it, the extropian future was ambitious and spectacular. Besides life extension, arguably the central pillar of the movement, extropian prospects included a wide array of futurist technologies, ranging from artificial intelligence to space colonization to mind uploading to human cloning to fusion energy.

Importantly, extropianism had to remain rooted in science and technology—even if in often quite speculative forms. Extropians had to consider how to actualize a better future through critical and creative thinking and perpetual learning.